Taming the Ralph Part 2: AI Coding Agents in Practice

What we actually learned after letting Claude loose on our codebase for a day. Loop safety controls, prompt engineering patterns that work, and bridging automated and manual testing.

In Part 1, we explored the theory of running AI coding agents in autonomous loops. Now, after a day of actually doing it, here’s what we learned—warts and all.

The gap between theory and practice

Our first loop runs were… disappointing. The agent would analyze code beautifully, make sensible changes, then skip straight to committing without running any tests. The prompt said “run tests,” but Claude interpreted that as optional guidance rather than a hard requirement.

Lesson 1: AI agents treat instructions like suggestions unless you make them unmissable.

We went from this:

### 5. Run Tests

Run tests before committing.

To this:

### 5. Run Tests (MANDATORY - DO NOT SKIP)

**🛑 STOP: You MUST run these commands before proceeding to step 6.**

Run the following commands using the Bash tool NOW:

The key changes:

- Added “MANDATORY” and “DO NOT SKIP” to the heading

- Used a stop emoji as a visual interrupt

- Changed “Run tests before committing” to “Run NOW”

- Explicitly said to use “the Bash tool” (not just “run”)

After these changes, test compliance went from ~20% to ~95%.

The manual testing queue

Some components simply can’t be tested automatically:

- Tooltips that only appear once per user

- First-time modals gated by localStorage flags

- Admin features requiring specific permissions

- Dynamic content that depends on external data

Rather than skip these components entirely, we created a manual testing queue:

## component-name - 2025-01-02

**Status:** [ ] Pending manual test

### Change Made

- Description of the optimization applied

### How to Test

1. Navigate to the relevant page

2. Trigger the specific interaction

3. Verify the component behaves correctly

### Why Automated Tests Can't Cover This

- Explain the limitation (one-time display, requires specific state, etc.)

The prompt now writes to this queue whenever it optimizes a component that can’t be automatically verified. After a loop run, we review the queue and test manually.

Lesson 2: Not everything can be automated. Build a bridge between automated and manual testing.
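Reviewing the queue after a run can start with a quick list of the entries that are still unchecked. The sketch below is one way to do that, not part of our original tooling. It assumes the queue lives in `MANUAL_TESTING_QUEUE.md` and that every entry keeps the `## name - date` heading and the `**Status:** [ ] Pending manual test` line from the template. The function name `list_pending` is hypothetical.

```shell
#!/bin/bash
# Sketch: list queue entries that are still pending manual testing.
# Assumes each entry starts with a "## name - date" heading followed by
# a "**Status:** [ ] Pending manual test" line, as in the template.
# list_pending is a hypothetical helper name.

list_pending() {
  local queue_file="${1:-MANUAL_TESTING_QUEUE.md}"
  # A missing queue simply means nothing is pending.
  [ -f "$queue_file" ] || return 0
  awk '
    # Remember the most recent entry heading ("### ..." lines do not match).
    /^## / { heading = substr($0, 4) }
    # An unchecked status line means that entry still needs a manual test.
    /\[ \] Pending manual test/ { print heading }
  ' "$queue_file"
}
```

Calling `list_pending` with no argument reads the default file in the current directory; piping it through `wc -l` gives a count.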

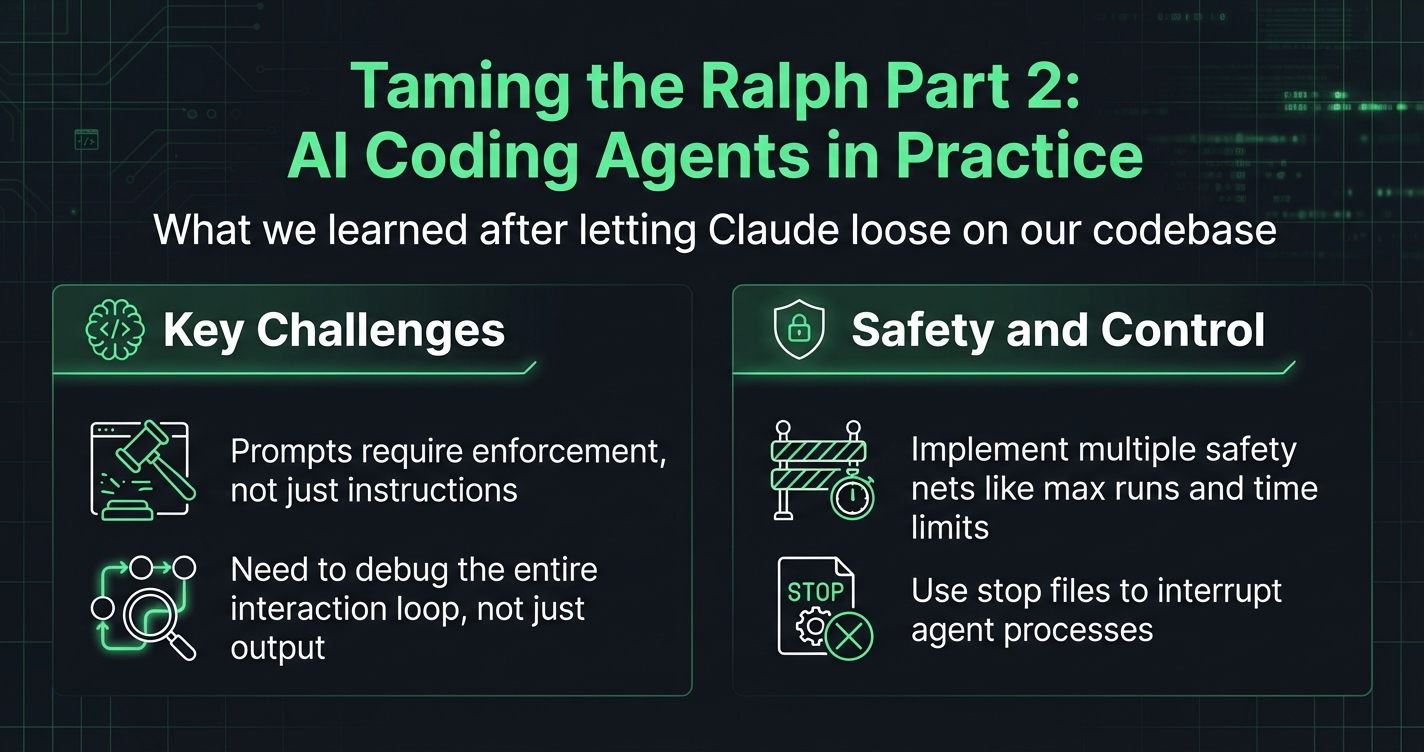

Loop safety controls

Running an AI agent in an infinite loop on your codebase requires safeguards:

The stop file pattern

rm -f ~/STOP_LOOP
RUN=1

while [ ! -f ~/STOP_LOOP ]; do
  echo "=== Run $RUN ==="
  cat PROMPT.md | claude -p --dangerously-skip-permissions
  ((RUN++))
done

To stop gracefully between iterations: touch ~/STOP_LOOP

This is much cleaner than Ctrl+C, which might interrupt mid-commit.
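The stop file also gives the script a way to halt itself. The following is a minimal sketch under our own assumptions, not something from the original loop: `TEST_CMD` is a hypothetical variable holding your project's test command (with `true` as a placeholder), and `halt_if_tests_fail` is a hypothetical helper. After an iteration, the script runs the tests on its own and creates the stop file if they fail, so the loop ends for review instead of building on a broken state.

```shell
#!/bin/bash
# Sketch: let the loop script create the stop file itself when tests fail.
# TEST_CMD and halt_if_tests_fail are hypothetical names; replace the
# placeholder with your real test command.

STOP_FILE="${STOP_FILE:-$HOME/STOP_LOOP}"
TEST_CMD="${TEST_CMD:-true}"   # placeholder for your project's test runner

halt_if_tests_fail() {
  # Run the test command in a child shell; on failure, request a graceful stop.
  if ! bash -c "$TEST_CMD"; then
    echo "Tests failed; creating $STOP_FILE so the loop stops" >&2
    touch "$STOP_FILE"
    return 1
  fi
  return 0
}
```

Called right after the `claude` line inside the loop, a failing test run creates the file, and the loop's `[ ! -f ~/STOP_LOOP ]` check ends things before the next iteration starts.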

Combined safety nets

Our production script uses three conditions:

MAX_RUNS=10
MAX_HOURS=4
END_TIME=$(($(date +%s) + MAX_HOURS * 3600))
RUN=1

while [ "$RUN" -le "$MAX_RUNS" ] &&
      [ ! -f ~/STOP_LOOP ] &&
      [ "$(date +%s)" -lt "$END_TIME" ]; do
  # Run iteration
  ((RUN++))
done

Stops when ANY condition is met:

- Reached maximum iterations

- Exceeded time limit

- Stop file created

Lesson 3: Defense in depth applies to automation too.
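With three exit conditions, it helps to know which one actually ended the loop. Here is a sketch of one way to report that. The helper name `stop_reason` and its output strings are our own invention for illustration; the arguments mirror the variables used in the loop condition.

```shell
#!/bin/bash
# Sketch: report which safety net ended the loop.
# stop_reason is a hypothetical helper. It prints nothing while the loop
# may continue, and a short label once any limit has been hit.

stop_reason() {
  local run="$1" max_runs="$2" end_time="$3" stop_file="$4"
  if [ "$run" -gt "$max_runs" ]; then
    echo "max runs reached"
  elif [ -f "$stop_file" ]; then
    echo "stop file found"
  elif [ "$(date +%s)" -ge "$end_time" ]; then
    echo "time limit exceeded"
  fi
}

# Possible use in the loop script (not executed here):
#   while reason=$(stop_reason "$RUN" "$MAX_RUNS" "$END_TIME" ~/STOP_LOOP)
#         [ -z "$reason" ]; do
#     # Run iteration
#     ((RUN++))
#   done
#   echo "Loop ended: $reason"
```

Keeping the checks in one function means the log line at the end always matches the condition that was actually tested.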

The summary step

After the loop completes, we ask Claude to summarize what happened:

# After loop ends
SUMMARY_PROMPT="Summarize the last 10 git commits and:
1. List components that were changed
2. Check MANUAL_TESTING_QUEUE.md for pending tests
3. Note any failures or reverts"

echo "$SUMMARY_PROMPT" | claude -p --dangerously-skip-permissions

This gives us a clear action list without manually reviewing each commit.

Lesson 4: The loop’s output should tell you what to do next.
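"The last 10 git commits" is an approximation, since a run may produce fewer or more commits than that. One possible refinement, sketched here with hypothetical helper names (`record_start`, `loop_commits`), is to note the commit the loop started from and hand the summary prompt exactly the commits made since then. It relies only on `git rev-parse HEAD` and the `A..B` range syntax of `git log`.

```shell
#!/bin/bash
# Sketch: capture the commits made during the loop instead of "the last 10".
# record_start and loop_commits are hypothetical helper names.

record_start() {
  # Print the commit the loop starts from.
  git rev-parse HEAD
}

loop_commits() {
  # List commits made after the recorded start, oldest first.
  local start_sha="$1"
  git log --reverse --oneline "${start_sha}..HEAD"
}

# Possible use in the loop script (not executed here):
#   START_SHA=$(record_start)
#   ... run the loop ...
#   { echo "Summarize these commits:"; loop_commits "$START_SHA"; } \
#     | claude -p --dangerously-skip-permissions
```

If the loop made no commits, `loop_commits` prints nothing, which is itself a useful signal.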

What the agent actually produced

After a day of running, our agent:

- Optimized 8 components with performance improvements

- Identified 5 components requiring manual testing

- Reverted 1 change that broke tests

- Generated detailed commit messages explaining each change

The commit messages alone are valuable documentation:

perf(weather-display): optimize rendering performance

## What I Found

- Display component rendering weather data

- Multiple list iterations

- Pure presentational, no side effects

## What I Changed

- Added performance optimizations

- Improved list rendering efficiency

## Why This Is Safe

- All data passed via props

- No internal state mutations

- No complex lifecycle dependencies

Prompt engineering patterns that worked

The “Do X NOW” pattern

Instead of describing what should happen, command it to happen immediately:

Before: "You should run tests before committing"

After: "Run the following commands using the Bash tool NOW:"

The escape hatch pattern

Give the agent permission to bail out:

### Red Flags - Stop and Pick a Different Component If You See:

- Component has complex state management

- Component uses manual DOM manipulation

- You're unsure about any data flow pattern

This prevents the agent from making risky changes just to complete the task.

The explicit tool pattern

Name the exact tool to use:

Before: "Run the test script"

After: "Run the following commands using the Bash tool:"

The checklist pattern

For complex decisions, provide a table:

| Pattern | Safe to Change? | Action Needed |
|---------|-----------------|---------------|
| Pure props/inputs | Yes | None |
| Manual subscriptions | Risky | Review carefully |
| External callbacks | No | Skip this component |

The complete loop script

Here’s our production script:

#!/bin/bash

# Abort if the repo path is wrong, so the agent never runs in the wrong directory
cd /path/to/your/repo || exit 1

MAX_RUNS=${1:-10}
MAX_HOURS=${2:-4}
STOP_FILE=~/STOP_LOOP
PROMPT_FILE="./PROMPT.md"

# Clear any stop file left over from a previous run
rm -f "$STOP_FILE"

END_TIME=$(($(date +%s) + MAX_HOURS * 3600))
RUN=1

echo "Starting loop: $MAX_RUNS runs, $MAX_HOURS hours max"
echo "Stop with: touch $STOP_FILE"

# Continue only while all three safety conditions hold
while [ "$RUN" -le "$MAX_RUNS" ] &&
      [ ! -f "$STOP_FILE" ] &&
      [ "$(date +%s)" -lt "$END_TIME" ]; do
  echo "=== Run $RUN of $MAX_RUNS ==="
  cat "$PROMPT_FILE" | claude -p --dangerously-skip-permissions
  ((RUN++))
  sleep 5
done

echo "Completed $((RUN-1)) runs"

# Generate summary
cat << 'EOF' | claude -p --dangerously-skip-permissions
Summarize the last 10 git commits:
1. What components were changed
2. Any pending manual tests
3. Any failures to investigate
EOF

Key takeaways

- Prompts need enforcement, not just instructions - Use MANDATORY, STOP, and NOW

- Debug the loop, not just the output - Watch what the agent actually does

- Build bridges to manual testing - Not everything can be automated

- Multiple safety nets - Max runs + max time + stop file

- End with a summary - Know what to do next without reading every commit

The agent isn’t replacing developers—it’s a tireless junior developer who needs clear instructions, guardrails, and supervision. Give it those, and it becomes genuinely useful.
